Tutorial #1: Apache Spark in a Nutshell

Synopsis

This tutorial offers a whirlwind tour of important Apache Spark concepts. There is no hands-on work involved.

Tutorial Goals

- You will understand what Spark is, and how it might satisfy your data processing requirements.

What Is Spark?

Apache Spark is an open-source framework for distributed data processing. A developer who uses the Spark ecosystem in an application can focus on his or her domain-specific data processing business case, while trusting that Spark will handle the messy details of parallel computing, such as data partitioning, job scheduling, and fault tolerance. This separation of concerns supports the flexibility and scalability needed to handle massive volumes of data efficiently.

Spark is deployed as a cluster consisting of a master server and many worker servers. The master server accepts incoming jobs and breaks them down into smaller tasks that can be handled by workers. A cluster includes tools for logging and monitoring progress and status. Profiling metrics are easily accessible, and can support the decision on when to improve performance with additional workers or code changes.
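To make the master/worker split concrete, here is a minimal sketch in plain Python. It is an analogy only, not Spark code: a thread pool stands in for the worker servers, and the helper names (`split_into_partitions`, `sum_of_squares_task`, `run_job`) are hypothetical, invented for this illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_partitions(data, num_partitions):
    """Split a list into roughly equal, contiguous partitions."""
    size, remainder = divmod(len(data), num_partitions)
    partitions, start = [], 0
    for i in range(num_partitions):
        # The first `remainder` partitions each take one extra element.
        end = start + size + (1 if i < remainder else 0)
        partitions.append(data[start:end])
        start = end
    return partitions


def sum_of_squares_task(partition):
    """The small task that each 'worker' runs on its own partition."""
    return sum(x * x for x in partition)


def run_job(data, num_workers=4):
    """Play the 'master' role: split the job into tasks, dispatch, combine."""
    partitions = split_into_partitions(data, num_workers)
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        partial_results = list(pool.map(sum_of_squares_task, partitions))
    return sum(partial_results)


total = run_job(list(range(1, 101)))
print(total)
```

In a real cluster the partitions live on different machines and the scheduler also handles retries and data locality. The sketch only shows the shape of the idea: one job becomes several independent tasks whose partial results are combined at the end.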

Spark increases processing efficiency by implementing Resilient Distributed Datasets (RDDs), which are immutable, fault-tolerant collections of objects. RDDs are the foundation for all high-level Spark objects (such as DataFrames and Datasets) and can be built from any arbitrary data, such as a text file in Amazon S3, or an array of integers in the application itself. With Spark, your application can perform transformations on RDDs to create new RDDs, or actions that return the result of some computation. Spark transformations are "lazy", which means that they are not actually executed until an action is called. This reduces processing churn, making Spark more appropriate for iterative data processing workflows where more than one pass across the data might be needed.
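The difference between lazy transformations and actions can be sketched without a cluster. The toy class below is a plain-Python stand-in, not the Spark API: `LazyDataset` and `traced_square` are hypothetical names invented for this illustration. The method names mirror the real RDD operations `map`, `filter`, `collect`, and `count`.

```python
class LazyDataset:
    """Toy stand-in for an RDD: immutable, and it records
    transformations instead of running them."""

    def __init__(self, source, steps=()):
        self._source = tuple(source)  # never modified after creation
        self._steps = tuple(steps)    # recorded chain of transformations

    def map(self, fn):
        # Transformation: returns a NEW dataset with one more recorded step.
        return LazyDataset(self._source, self._steps + (("map", fn),))

    def filter(self, predicate):
        # Transformation: also lazy, nothing is evaluated here.
        return LazyDataset(self._source, self._steps + (("filter", predicate),))

    def _compute(self):
        # Replays the recorded steps over the source, one item at a time.
        for item in self._source:
            keep = True
            for kind, fn in self._steps:
                if kind == "map":
                    item = fn(item)
                elif not fn(item):
                    keep = False
                    break
            if keep:
                yield item

    def collect(self):
        # Action: triggers the computation and returns the results.
        return list(self._compute())

    def count(self):
        # Action: triggers the computation and returns the number of items.
        return sum(1 for _ in self._compute())


calls = []


def traced_square(x):
    calls.append(x)  # record every real invocation
    return x * x


numbers = LazyDataset(range(1, 6))
evens_squared = numbers.map(traced_square).filter(lambda x: x % 2 == 0)
calls_before_action = len(calls)  # still 0: only the recipe was recorded
result = evens_squared.collect()  # the action finally runs the recipe
print(calls_before_action, result)
```

Because each dataset keeps its source and its recorded steps, a lost result can be rebuilt by replaying the steps. That is a simplified view of how recorded lineage can support fault tolerance.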

Spark is an open-source project (under the Apache Software Foundation) with a healthy developer community and extensive documentation (dispelling the myth that all open-source documentation is a barren wasteland of incomplete JavaDocs). Adopting Spark provides the intrinsic benefits of open-source software (OSS), such as plentiful community support, rapid releases, and shared innovation. Spark is released under the Apache License 2.0, which tends to scare the potential customers of your application much less than some "copyleft" licenses.

What Isn't Spark?

- Spark is not a data storage solution:

Spark is a stateless computing engine that acts upon data from somewhere else. It requires some other storage provider to store your source data and saved, processed data, and supports a wide variety of options (such as your local filesystem, Amazon S3, the Hadoop Distributed File System [HDFS], or Apache Cassandra), but it does not implement storage itself.

- Spark is not a Hadoop Killer:

In spite of some articles with clickbait headlines, Spark is actually a complementary addition to Apache Hadoop, rather than a replacement. It's designed to integrate well with components from the Hadoop ecosystem (such as HDFS, Hive, or YARN). Spark can be used to extend Hadoop, especially in use cases where the Hadoop MapReduce paradigm has become a limiting factor in the scalability of your solution. Conversely, you don't need to use Hadoop at all if Spark satisfies your requirements on its own.

- Spark is not an in-memory computing engine:

Spark exploits in-memory processing as much as possible to increase performance, but employs complementary approaches in memory usage, disk usage, and data serialization to operate pragmatically within the limitations of the available infrastructure. Once memory resources are exhausted, Spark can spill the overflow data onto disk and continue processing.

- Spark is not a miracle product:

At the end of the day, Spark is just a collection of very useful Application Programming Interfaces (APIs). It's not a shrinkwrapped product that you turn on and set loose on your data for immediate benefit. As the domain expert on your data, you still need to write the application that extracts value from that data. Spark just makes your job much easier.
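The "spill to disk" behaviour mentioned in the in-memory point above can be sketched as follows. This is a toy model under stated assumptions, not how Spark manages memory internally: `SpillableBuffer` is a hypothetical class, the limit is a simple item count rather than a byte budget, and `pickle` stands in for Spark's serialization.

```python
import pickle
import tempfile


class SpillableBuffer:
    """Keep items in memory up to a limit, then spill overflow to a temp file."""

    def __init__(self, max_in_memory):
        self._max_in_memory = max_in_memory
        self._in_memory = []
        self._spill_file = None
        self.spilled_count = 0

    def append(self, item):
        if len(self._in_memory) < self._max_in_memory:
            self._in_memory.append(item)
            return
        if self._spill_file is None:
            # Created lazily, only once memory is "exhausted".
            self._spill_file = tempfile.TemporaryFile()
        self._spill_file.seek(0, 2)  # always write at the end of the file
        pickle.dump(item, self._spill_file)
        self.spilled_count += 1

    def __iter__(self):
        # Serve the in-memory items first, then read back the spilled ones.
        yield from self._in_memory
        if self._spill_file is not None:
            self._spill_file.seek(0)
            for _ in range(self.spilled_count):
                yield pickle.load(self._spill_file)


buffer = SpillableBuffer(max_in_memory=3)
for n in range(10):
    buffer.append(n)

print(buffer.spilled_count, sum(buffer))
```

Processing continues with the same results either way. The spilled items are simply slower to reach, which is the trade-off the text describes.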

How Does Spark Deliver on Its Promises?

The Apache Spark homepage touts four separate benefits:

- Speed: With its implementation of RDDs and the ability to cluster, Spark is much faster than a comparable job executed with Hadoop MapReduce. Additionally, Spark prefers in-memory computation wherever possible, greatly reducing file I/O as a bottleneck.

- Ease of Use: Although Spark itself was originally written in Scala, the Spark API is offered in Java, Python, R, and Scala. This greatly lowers the barrier to entry for developers who already know at least one of these languages. The official documentation includes code samples in multiple languages as well.

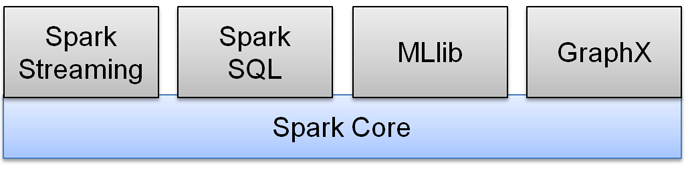

- Generality: Spark includes four targeted libraries built on top of its Core API, as shown in the image above. Spark Streaming supports streaming data sources (such as Twitter feeds) while Spark SQL provides options for exploring and mining data in ways much closer to the mental model of a data analyst. MLlib enables machine learning use cases and GraphX is available for graph computation. Previously, each of these areas might have been served by a different tool that needed to be integrated into your solution. Spark makes it easy to combine these libraries into a single application without extra integration or the need to learn the idiosyncrasies of unrelated tools. In addition, Spark Packages provides an easy way to discover and integrate third-party tools and libraries.

- Runs Everywhere: Spark focuses narrowly on the complexities of data processing, leaving several technology choices in your hands. Data can originate from many compatible storage solutions. Spark clusters can be managed by Spark's "Standalone" cluster manager or another popular manager (Apache Mesos or Hadoop YARN). Finally, Spark's cluster is very conducive to deployment in a cloud environment (with helpful scripts provided for Amazon Web Services in particular). This greatly increases the tuning options and scalability of your application.

When Should I Use Spark?

Spark is quite appropriate for a broad swath of data processing use cases, such as data transformation, analytics, batch computation, and machine learning. The generality of Spark means that there isn't a single use case it's best for. Instead, consider using Spark if some of these conditions apply to your scenario.

- You are starting a new data processing application.

- You expect to have massive datasets (on the order of petabytes).

- You expect to integrate with a variety of data sources or the Hadoop ecosystem.

- You expect to need more than one of Spark's libraries (e.g., MLlib and SQL) in the same application.

- Your data processing workflow is a pipeline of iterative steps that might be refined in multiple passes fairly dynamically.

- Your data processing workflow would be limited by the rigidity or performance of Hadoop MapReduce.

What's the Best Programming Language For Spark?

Spark applications can be written in Java, Python, R, or Scala, and the language you pick should be a pragmatic decision based on your skillset and application requirements.

- From an ease of adoption perspective, Python offers a friendly, forgiving entry point, especially if you're coming from a data science background. In 2015, Scala and Python were the most frequently used languages for Spark development, and this larger user base makes it much easier to seek out help and discover useful example code. Java is hampered by the lack of an interactive Spark shell; the shells available for the other languages let you test Spark commands in real time.

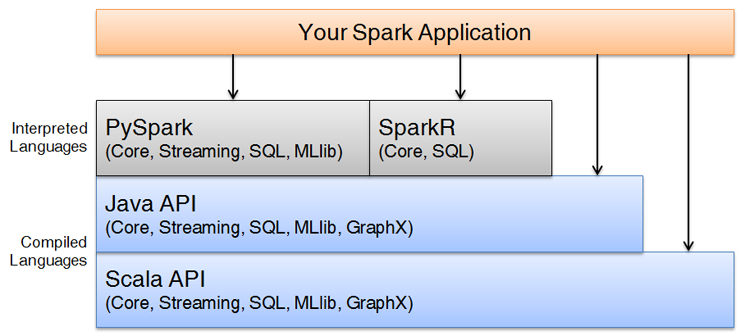

- From a feature completeness perspective, Scala is the obvious choice. Spark itself is written in Scala, and APIs in the other three languages are built on top of the Scala API, as shown in the image above. New features are published to the Scala API first, and it may take a few releases for the features to propagate up to the other APIs. R is the least feature-complete at the moment, but active development work continues to improve this situation.

- From a performance perspective, the compiled languages (Java and Scala) provide better general performance than the interpreted languages (Python and R) in most cases. However, this should not be accepted as a blanket truth without profiling, and you should be careful not to select a language solely on the basis of performance. Aim for developer productivity and code readability first, and optimize later when you have a legitimate performance concern.

My personal recommendation (as a developer with over 20 years of Java experience, a few years of Python experience, and miscellaneous dabbling in the other languages) would be to favour Python for learning and smaller applications, and Scala for enterprise projects. There's absolutely nothing wrong with writing Spark code in Java, but it sometimes feels unnecessarily inflexible, like running a marathon with shoes one size too small. Until the R API is more mature, I would only consider R if I had specific requirements for pulling parts of Spark into an existing R environment. Feel free to disregard these recommendations if they don't align with your views.

Conclusion

You have now gotten a taste of Apache Spark in theory, and are ready to do some hands-on work. In the next tutorial, Tutorial #2: Installing Spark on Amazon EC2, we install Apache Spark on an Amazon EC2 instance.

Change Log

- 2016-03-29: Updated with a section comparing the available programming languages (SPARKOUR-6).