Configuring an S3 VPC Endpoint for a Spark Cluster

Synopsis

This recipe shows how to set up a VPC Endpoint for Amazon S3, which allows your Spark cluster to interact with S3 resources from a private subnet without a Network Address Translation (NAT) instance or Internet Gateway.

Prerequisites

- You need an AWS account and a general understanding of how AWS billing works.

- You need an EC2 instance with the AWS command line tools installed, so you can test the connection. The instance created in either Tutorial #2: Installing Spark on Amazon EC2 or Managing a Spark Cluster with the spark-ec2 Script is sufficient.

Target Versions

- This recipe is independent of any specific version of Spark or Hadoop. All work is done through Amazon Web Services (AWS).


Introducing VPC Endpoints

Amazon S3 is a key-value object store that can be used as a data source for your Spark cluster. Normally, connections between EC2 instances in a Virtual Private Cloud (VPC) and resources in S3 require an Internet Gateway to be established in the VPC. However, you may need to deploy your Spark cluster in a private subnet where no Internet Gateway is available. In this case, you can establish a VPC Endpoint, which enables secure connections to S3 without the extra expense of a NAT instance. (Without an Endpoint, a NAT instance would be needed to allow instances in the private subnet to share the Internet Gateway of a nearby public subnet.)

S3 is one of several Amazon services accessible over a VPC Endpoint, and other services are expected to adopt Endpoints in the future. Using a VPC Endpoint to access S3 also improves your cluster's security posture, because traffic between the cluster and S3 never leaves the Amazon network.

Establishing the VPC Endpoint

- Log in to your AWS Management Console and select the VPC service.

- Navigate to Endpoints in the left side menu, and then select Create Endpoint at the top of the page. This starts a wizard workflow to create a new Endpoint.

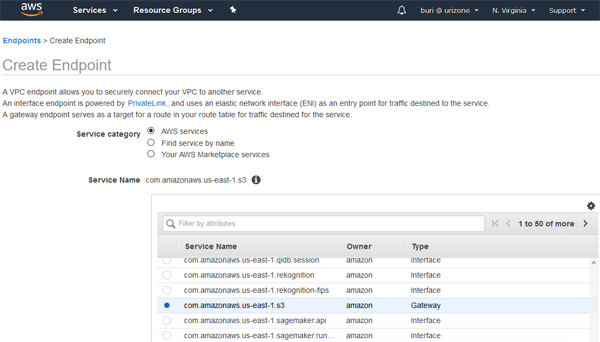

- Select com.amazonaws.us-east-1.s3 as the AWS Service Name, as shown

in the image below.

- Set the VPC to the VPC containing your Spark cluster or other EC2 instances. In this example, we're using the Default VPC provided with the base AWS account.

- Select the route table corresponding to subnets that need access to the Endpoint. In this example, the default VPC has a route table attached to the four default subnets. Any EC2 instance in any of the subnets is able to use the Endpoint, unless we explicitly configure an access control policy on the previous page.

- As with other AWS resources, you can attach a policy to the VPC Endpoint for fine-grained access control. You can also configure bucket policies in S3 to control access from specific Endpoints. In this example, we're using the Full Access policy on the Endpoint. The recipe, Configuring Amazon S3 as a Spark Data Source, shows how to configure bucket policies.

- Before you proceed, make sure that there is no active communication between your Spark cluster and S3, because any active connections are dropped when the Endpoint is established. When ready, select Create endpoint. You should see "The following VPC Endpoint was created" as a status message. Select Close to return to the list of Endpoints.

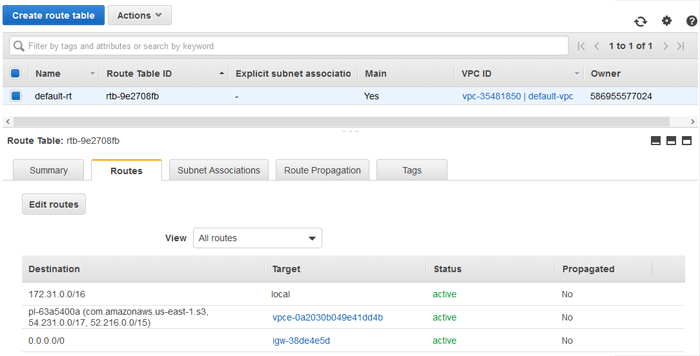

- From the VPC Dashboard, navigate to Route Tables and then select the modified route table in the dashboard. Details about the route table appear in the lower pane, as seen in the image below. Select the Routes tab.

- Routes are evaluated from most specific to least specific, so the most specific route that matches the destination wins. In this example, there is a route for local traffic within the VPC, a new route pointing to our new S3 VPC Endpoint, and a catch-all route pointing to the Internet Gateway for all other traffic. (If you are working in a private subnet, you should not see a catch-all route to an Internet Gateway.)
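The console steps above can also be scripted. The following is a minimal sketch, assuming the AWS SDK for Python (boto3) is installed and credentials are configured; this recipe does not otherwise use boto3. The helper names (`build_endpoint_request`, `create_s3_endpoint`, `routes_to_endpoint`) are hypothetical, the policy constant approximates the console's Full Access policy, and the VPC and route table IDs are whatever your own account uses. The last helper mirrors the Route Tables check by listing the routes whose target is the new Endpoint.

```python
import json

# Approximation of the console's "Full Access" endpoint policy.
# Omitting PolicyDocument entirely also gives full access.
FULL_ACCESS_POLICY = {
    "Version": "2008-10-17",
    "Statement": [
        {"Effect": "Allow", "Principal": "*", "Action": "*", "Resource": "*"}
    ],
}


def build_endpoint_request(vpc_id, route_table_ids, region):
    """Return keyword arguments for the EC2 client's create_vpc_endpoint call."""
    return {
        "VpcEndpointType": "Gateway",  # S3 endpoints here are gateway endpoints
        "VpcId": vpc_id,
        # S3 service names follow the pattern com.amazonaws.<region>.s3
        "ServiceName": f"com.amazonaws.{region}.s3",
        "RouteTableIds": list(route_table_ids),
        "PolicyDocument": json.dumps(FULL_ACCESS_POLICY),
    }


def create_s3_endpoint(ec2_client, vpc_id, route_table_ids, region):
    """Create the endpoint and return its ID (a string starting with 'vpce-')."""
    response = ec2_client.create_vpc_endpoint(
        **build_endpoint_request(vpc_id, route_table_ids, region)
    )
    return response["VpcEndpoint"]["VpcEndpointId"]


def routes_to_endpoint(ec2_client, route_table_id, endpoint_id):
    """Return the routes in one route table whose target is the endpoint.

    Gateway endpoint routes use the S3 prefix list (pl-...) as their
    destination instead of a CIDR block.
    """
    tables = ec2_client.describe_route_tables(RouteTableIds=[route_table_id])
    routes = tables["RouteTables"][0]["Routes"]
    return [route for route in routes if route.get("GatewayId") == endpoint_id]
```

With boto3 available, `boto3.client("ec2", region_name="us-east-1")` returns a client that can be passed as `ec2_client`. Keeping the request construction in a separate function makes it easy to inspect the parameters before anything is created.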

Next Steps

The recipe, Configuring Amazon S3 as a Spark Data Source, provides instructions for setting up an S3 bucket and testing a connection between EC2 and S3. Without an Endpoint, those tests send and receive traffic through the configured Internet Gateway, out of Amazon's network, and then back into S3. Now that you have created a VPC Endpoint, it is the preferred route for such traffic, because its route is more specific than the catch-all route. If your Spark cluster is in a private subnet, those tests should fail without a VPC Endpoint in place.
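For a quick scripted version of the connection test, a sketch like the following issues a single lightweight request against a bucket you own. It assumes a boto3 S3 client is passed in; the function name `s3_reachable` is hypothetical and is not part of the other recipe, which uses its own tests.

```python
def s3_reachable(s3_client, bucket):
    """Return (True, None) if a HEAD request on the bucket succeeds,
    otherwise (False, "<ExceptionType>: <message>").

    head_bucket transfers no object data. Any exception counts as a failure:
    botocore raises different exception classes for network timeouts and
    for permission errors, and either means the test did not pass.
    """
    try:
        s3_client.head_bucket(Bucket=bucket)
        return True, None
    except Exception as exc:  # broad on purpose; see docstring
        return False, f"{type(exc).__name__}: {exc}"
```

When there is no usable route to S3, the request can hang until botocore's default timeouts and retries are exhausted. Creating the client with something like `botocore.config.Config(connect_timeout=5, retries={"max_attempts": 1})` should make a missing route show up as a failure sooner.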

If your Spark cluster is in a public subnet and you want to confirm that the VPC Endpoint is working, you can temporarily detach the Internet Gateway from the VPC:

- Confirm that no critical applications that depend on the Internet Gateway are running.

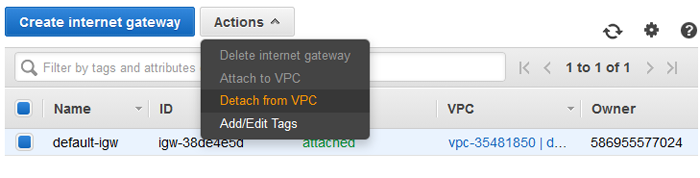

- From the VPC Dashboard, navigate to Internet Gateways in the left side menu, and then select the Internet Gateway attached to your VPC, as shown in the image below.

- Select Detach from VPC and then try the connection tests from the other recipe again. If your VPC Endpoint is configured properly, the connection between EC2 and S3 will continue to work without the Internet Gateway.

- When you have confirmed the behavior, select Attach to VPC to restore Internet connectivity to your VPC.
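The detach-and-reattach check can also be sketched with boto3, using a context manager so the gateway is reattached even if something fails in between. This is a minimal sketch, assuming a boto3 EC2 client is passed in; the helper names are hypothetical. Because the S3 Endpoint carries only S3 traffic, not EC2 API calls, run this from a machine outside the VPC (such as your workstation) rather than from an instance whose only path to the EC2 API is the Internet Gateway being detached.

```python
from contextlib import contextmanager


def attached_internet_gateway_ids(ec2_client, vpc_id):
    """Return the IDs of Internet Gateways attached to the given VPC."""
    response = ec2_client.describe_internet_gateways(
        Filters=[{"Name": "attachment.vpc-id", "Values": [vpc_id]}]
    )
    return [igw["InternetGatewayId"] for igw in response["InternetGateways"]]


@contextmanager
def internet_gateway_detached(ec2_client, vpc_id):
    """Temporarily detach the VPC's Internet Gateway, reattaching it on exit.

    The finally block reattaches every gateway that was detached, even if
    the code inside the with block raises, so the VPC is not left without
    Internet connectivity.
    """
    igw_ids = attached_internet_gateway_ids(ec2_client, vpc_id)
    detached = []
    try:
        for igw_id in igw_ids:
            ec2_client.detach_internet_gateway(InternetGatewayId=igw_id, VpcId=vpc_id)
            detached.append(igw_id)
        yield igw_ids
    finally:
        for igw_id in detached:
            ec2_client.attach_internet_gateway(InternetGatewayId=igw_id, VpcId=vpc_id)
```

For example, placing an `input("Run the connection tests, then press Enter")` call inside `with internet_gateway_detached(ec2, vpc_id):` keeps the gateway detached only while you run the tests from the other recipe.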

Reference Links

- VPC Endpoints in the Amazon VPC Documentation

- Example Bucket Policies for VPC Endpoints in the Amazon S3 Documentation

- Configuring Amazon S3 as a Spark Data Source

- Configuring Spark to Use Amazon S3

Change Log

- This recipe hasn't had any substantive updates since it was first published.

Spot any inconsistencies or errors? See things that could be explained better or code that could be written more idiomatically? If so, please help me improve Sparkour by opening a ticket on the Issues page. You can also discuss this recipe with others in the Sparkour community on the Discussion page.