Managing a Spark Cluster with the spark-ec2 Script

Synopsis

This recipe describes how to automatically launch, start, stop, or destroy a Spark cluster running in Amazon Elastic Compute Cloud (EC2) using the spark-ec2 script. It steps through the pre-launch configuration, explains the script's most common parameters, and points out where specific parameter values can be found in the Amazon Web Services (AWS) Management Console.

Prerequisites

- You need an AWS account and a general understanding of how AWS billing works. You also need to generate access keys (to run the spark-ec2 script) and create an EC2 Key Pair (to SSH into the launched cluster).

- You need a launch environment with Apache Spark and Python installed, from which you run the spark-ec2 script.

Target Versions

- The spark-ec2 script dynamically locates your target version of Spark in GitHub. Any version of Spark should work, although you may need to edit spark_ec2.py for newer versions. Around line 57, find the array of VALID_SPARK_VERSIONS and add the version you wish to use.

- The spark-ec2 script only supports three versions of Hadoop right now: Hadoop 1.0.4, Hadoop 2.4.0, or the Cloudera Distribution with Hadoop (CDH) 4.2.0. If you have application dependencies that require another version of Hadoop, you will need to manually set up the cluster without the script. These tutorials may be useful in that case:

Downloading the Script

The spark-ec2 script was detached from the main Spark distribution in Spark 2.0.0 and needs to be downloaded separately. If you are using an older version of Spark, you will find the script in your Spark distribution at $SPARK_HOME/ec2.

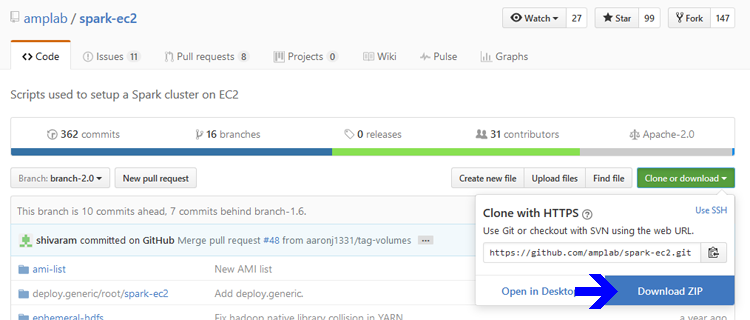

- If you are running Spark 2.x and need to download the script, visit the AMPLab spark-ec2 repository. In the Branch: dropdown menu, select branch-2.0, then open the Clone or download dropdown menu as shown in the image below. Hover the mouse over the Download ZIP button to see the download URL.

- Download the script to your launch environment and place the files into your Spark distribution.
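The download can also be done from the command line. The sketch below assumes git is installed and that the AMPLab repository lives at github.com/amplab/spark-ec2 with a branch-2.0 branch. The fallback path /opt/spark and the helper name download_spark_ec2 are illustrative, and the target directory mirrors where older Spark distributions kept the script.

```shell
#!/bin/sh
# Assumption: SPARK_HOME points at your Spark distribution (/opt/spark is a placeholder).
SPARK_HOME="${SPARK_HOME:-/opt/spark}"
EC2_DIR="$SPARK_HOME/ec2"
REPO_URL="https://github.com/amplab/spark-ec2.git"
BRANCH="branch-2.0"

# Hypothetical helper: clone only the selected branch into $SPARK_HOME/ec2.
# git refuses to clone into a directory that already exists and is not empty.
download_spark_ec2() {
    git clone --depth 1 --branch "$BRANCH" "$REPO_URL" "$EC2_DIR"
}

echo "Call download_spark_ec2 to clone $BRANCH into $EC2_DIR"
```

Nothing is downloaded until you call download_spark_ec2, so you can review the values first.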

Pre-Launch Configuration

Before running the spark-ec2 script, you must:

- Add the appropriate permissions to your AWS Identity and Access Management (IAM) user account that will allow you to run the script.

- Create an IAM Role for the instances in the generated Spark cluster that will allow them to access other AWS services.

- Make configuration decisions about how your Spark cluster should be deployed, and look up important IDs and values in the AWS Management Console.

Adding Launch Permissions

The spark-ec2 script requires permission to execute multiple AWS Actions, such as creating new EC2 instances and configuring security groups. These permissions are checked against the AWS user account used to run the script, and can be attached to a user account through the IAM service. As a best practice, you should not be using your root user credentials to run the script.

- Log in to your AWS Management Console and select the Identity & Access Management service.

- Navigate to Groups in the left side menu, and then select Create New Group at the top of the page. This starts a wizard workflow to create a new Group.

- On Step 1. Group Name, set the Group Name to a value like spark-launch and go to the Next Step.

- On Step 2. Attach Policy, select AdministratorAccess to grant a pre-defined set of administrative permissions to this Group. Go to the Next Step.

- On Step 3. Review, select Create Group. You return to the Groups dashboard, and should see your new Group listed on the dashboard.

- Next, click on the name of your group to show summary details. Go to the Users tab and select Add Users to Group.

- On the Add Users to Group page, select your IAM user account and then Add Users. You return to the Groups summary detail page, and should see your user account listed in the Group. Your account can now be used to run the spark-ec2 script.

These instructions provide all of the permissions needed to run the script, but advanced users may prefer to enforce a "least privilege" security posture with a more restrictive, custom policy. An example of such a policy is shown below, but the specific list of Actions may vary depending on the parameters you pass into the script to set up your Spark cluster.

You can create and attach this policy to your Group with the IAM service instead of the AdministratorAccess policy we used above. If you encounter authorization errors when running the script with this policy, you may need to add additional AWS Actions to the policy.
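As a rough illustration of what a more restrictive policy might look like, the sketch below writes a policy document to a local file. The Action list is an assumption based on the operations the script is described as performing (launching instances, configuring security groups, passing an IAM Role). It is not a verified minimal set and is not necessarily the policy the author used. The file and policy names are placeholders.

```shell
#!/bin/sh
# Write an illustrative least-privilege policy to a local file.
# Assumption: this Action list is a starting point, not a verified minimal set.
POLICY_FILE="${POLICY_FILE:-spark-launch-policy.json}"

cat > "$POLICY_FILE" <<'EOF'
{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": [
                "ec2:AuthorizeSecurityGroupIngress",
                "ec2:CreateSecurityGroup",
                "ec2:CreateTags",
                "ec2:DeleteSecurityGroup",
                "ec2:DescribeAvailabilityZones",
                "ec2:DescribeImages",
                "ec2:DescribeInstanceStatus",
                "ec2:DescribeInstances",
                "ec2:DescribeSecurityGroups",
                "ec2:DescribeSubnets",
                "ec2:DescribeVpcs",
                "ec2:RevokeSecurityGroupIngress",
                "ec2:RunInstances",
                "ec2:StartInstances",
                "ec2:StopInstances",
                "ec2:TerminateInstances",
                "iam:PassRole"
            ],
            "Resource": "*"
        }
    ]
}
EOF

echo "Wrote $POLICY_FILE"

# To attach it as an inline policy on the spark-launch Group with the AWS CLI:
#   aws iam put-group-policy --group-name spark-launch \
#       --policy-name spark-launch-policy \
#       --policy-document "file://$POLICY_FILE"
```

Narrowing "Resource" to specific ARNs would tighten this further, at the cost of more trial and error when the script reports authorization failures.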

Creating the IAM Role

By deploying your cluster in Amazon EC2, you gain access to other AWS service offerings such as Amazon S3 for data storage. Your cluster nodes need permission to access these services (separate from the permissions used to launch the cluster in the first place). Older Spark tutorials suggest that you pass your secret API keys to the instances as environment variables. This brittle "shared secret" approach is no longer a best practice in AWS, although it is the only way to use some older AWS integration libraries. (For example, the Hadoop library implementing the s3n protocol for loading Amazon S3 data only accepts secret keys).

A cleaner way to set this up is to use IAM Roles. You can assign a Role to every node in your cluster and then attach a policy granting those instances access to other services. No secret keys are involved, and the risk of accidentally disseminating keys or committing them in version control is reduced. The only caveat is that the IAM Role must be assigned when an EC2 instance is first launched. You cannot assign an IAM Role to an EC2 instance already in its post-launch lifecycle.

For this reason, it's a best practice to always assign an IAM Role to new instances, even if you don't need it right away. It is much easier to attach new permission policies to an existing Role than it is to terminate the entire cluster and recreate everything with Roles after the fact.

- Go to the Identity & Access Management service, if you are not already there.

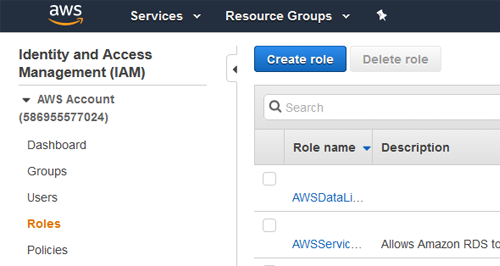

- Navigate to Roles in the left side menu, and then select Create role. This starts a wizard workflow to create a new role.

- On Step 1. Create role, select AWS service as the type of trusted entity. Choose EC2 as the service that will use this role. Select Next: Permissions.

- On Step 2. Create role, do not select any policies. (We add policies in other recipes when we need our instance to access other services.) Select Next: Tags.

- On Step 3. Create role, do not create any tags. Select Next: Review.

- On Step 4. Create role, set the Role name to a value like sparkour-app. Select Create role. You return to the Roles dashboard, and should see your new role listed on the dashboard.

- Exit this dashboard and return to the list of AWS service offerings by selecting the "AWS" icon in the upper left corner.
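If you prefer the AWS CLI to the console, the steps above might be approximated as follows. This is a sketch that assumes the AWS CLI is installed and configured with permission to manage IAM. Unlike the console, the CLI does not create an instance profile for the role automatically, so the hypothetical helper below creates one with the same name. The trust policy file name is a placeholder.

```shell
#!/bin/sh
ROLE_NAME="sparkour-app"
TRUST_FILE="${TRUST_FILE:-ec2-trust-policy.json}"

# Trust policy that lets EC2 instances assume the role
# (the CLI counterpart of choosing "AWS service" and "EC2" in the wizard).
cat > "$TRUST_FILE" <<'EOF'
{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": { "Service": "ec2.amazonaws.com" },
            "Action": "sts:AssumeRole"
        }
    ]
}
EOF

# Hypothetical helper: create the role with no permission policies attached,
# then wrap it in an instance profile of the same name.
create_instance_role() {
    aws iam create-role --role-name "$ROLE_NAME" \
        --assume-role-policy-document "file://$TRUST_FILE" &&
    aws iam create-instance-profile --instance-profile-name "$ROLE_NAME" &&
    aws iam add-role-to-instance-profile --instance-profile-name "$ROLE_NAME" \
        --role-name "$ROLE_NAME"
}

echo "Wrote $TRUST_FILE; call create_instance_role to create $ROLE_NAME"
```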

Gathering VPC Details

Next, you need to gather some information about the Virtual Private Cloud (VPC) where the cluster will be deployed. Setting up VPCs is outside the scope of this recipe, but Amazon provides many default components so you can get started quickly. Below is a checklist of important details you should have on hand before you run the script.

- Which AWS Region will I deploy into? You need the unique Region key for your selected region.

- Which VPC will I deploy into? You need the VPC ID of your VPC (the default is fine if you do not want to create a new one). From the VPC dashboard in the AWS Management Console, you can find this in the Your VPCs table.

- What's the IP address of my launch environment? The default Security Group created by the spark-ec2 script punches a giant hole in your security boundary by opening up many Spark ports to the public Internet. While this is useful for getting things done quickly, it's very insecure. As a best practice, you should explicitly specify an IP address or IP range so your cluster isn't immediately open to the world's friendliest hackers.
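One way to keep the checklist values together is to record them as shell variables before running the script. The values below are placeholders (the IP address comes from a range reserved for documentation), and the commented lookup commands assume a configured AWS CLI and outbound network access.

```shell
#!/bin/sh
# Placeholder values (assumptions); substitute your own.
REGION="us-east-1"
VPC_ID="vpc-0123456789abcdef0"
LAUNCH_IP="203.0.113.10"

# A /32 suffix restricts the authorized range to exactly one address.
AUTHORIZED_ADDRESS="$LAUNCH_IP/32"

echo "region=$REGION vpc=$VPC_ID authorized-address=$AUTHORIZED_ADDRESS"

# Optional lookups:
#   aws ec2 describe-vpcs --region "$REGION" --query 'Vpcs[].VpcId' --output text
#   curl -s https://checkip.amazonaws.com
```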

Script Parameters

The spark-ec2 script exposes a variety of configuration options. The most commonly used options are described below, and there are other options available for advanced users. Calling the script with the --help parameter displays the complete list.

- Identity Options

- AWS_SECRET_ACCESS_KEY / AWS_ACCESS_KEY_ID: These credentials are tied to your AWS user account, and allow you to run the script. You can create new keys if you have lost the old ones, by visiting the IAM User dashboard, selecting a user, selecting the Security credentials tab, and choosing Create access key. We merely set them as environment variables as a best practice, so they are not stored anywhere on the machine containing your script.

- --key-pair / --identity-file: These credentials allow the script to SSH into the cluster instances. You can create an EC2 Key Pair if you haven't already. The identity file must be installed in the launch environment where the script is run and must have permissions of 400 (readable only by you). Use the absolute path in the parameter.

- --copy-aws-credentials: Optionally allow the cluster to use the credentials with which you ran the script when accessing Amazon services. This is a brittle security approach, so only use it if you rely on a legacy library that doesn't support IAM Roles.

- VPC Options

- --region: Optionally set the key of the region where the cluster will live (defaults to US East).

- --vpc-id: The ID of the VPC where the instances will be launched.

- --subnet-id: Optionally set the ID of the Subnet within the VPC where the instances will be launched.

- --zone: Optionally set the Availability Zone(s) to launch in (defaults to a randomly chosen zone). You can also set this to all for higher availability at greater cost.

- --authorized-address: The whitelisted IP range used in the generated Security Group (defaults to the public Internet as 0.0.0.0/0, so you should always specify a value for better security).

- --private-ips: Optionally set to True for VPCs in which public IP addresses are not automatically assigned to instances. The script tries to connect to the cluster to configure it through Public DNS Names by default, and setting this to True forces the use of Private IPs instead (defaults to False).

- Cluster Options

- --slaves: Optionally set the number of slaves to launch (defaults to 1).

- --instance-type: Optionally set the EC2 instance type from a wide variety of options based on your budget and workload needs (defaults to m1.large, which is a deprecated legacy type).

- --master-instance-type: Optionally set a different instance type for the master (defaults to the overall instance type for the cluster).

- --master-opts: Optionally pass additional configuration properties to the master.

- --spark-version: Optionally choose the version of Spark to install. During the launch, the script will download and build the desired version of Spark from a configurable GitHub repository.

- --spark-git-repo / --spark-ec2-git-repo / --spark-ec2-git-branch: Optionally specify an alternate location for downloading Spark and Amazon Machine Images (AMIs) for the instances (defaults to the official GitHub repository).

- --hadoop-major-version: Optionally set to 1 for Hadoop 1.0.4, 2 for CDH 4.2.0, or yarn for Hadoop 2.4.0 (defaults to 1). No other versions are currently supported.

- Instance Options

- --instance-profile-name: Optionally set to the unique name of an IAM Role to assign to each instance.

- --ami: Optionally specify an AMI ID to install on the instances (defaults to the best-fit AMI from the official GitHub repository, based upon your selected instance type).

- --user-data: Optionally specify a startup script (from a location on the machine running the spark-ec2 script). If specified, this script will run on each of the cluster instances as an initialization step.

- --additional-tags: Optionally apply AWS tags to these instances. Tag keys and values are separated by a colon, and you can pass in a comma-separated list of tags: name:value,name2:value2.

- --instance-initiated-shutdown-behavior: Optionally set to stop to stop instances when stopping the cluster, or terminate to permanently destroy instances when stopping the cluster (defaults to stop).

- --ebs-vol-size / --ebs-vol-type / --ebs-vol-num: Optionally configure Elastic Block Store (EBS) Volumes to be created and attached to each of the instances.

Managing the Cluster

Launching the Cluster

Below is an example run of the spark-ec2 script. Refer to the previous section for an explanation of each parameter.
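A minimal sketch of such a run, using only parameters described in the previous section, might look like this. Every value is a placeholder, the fallback path /opt/spark and the helper name launch_cluster are illustrative, and the script is assumed to live at $SPARK_HOME/ec2/spark-ec2.

```shell
#!/bin/sh
# Set your credentials in the environment first (never commit real values):
#   export AWS_ACCESS_KEY_ID=<your-access-key-id>
#   export AWS_SECRET_ACCESS_KEY=<your-secret-access-key>

# Assumed script location; override SPARK_EC2 if yours differs.
SPARK_EC2="${SPARK_EC2:-${SPARK_HOME:-/opt/spark}/ec2/spark-ec2}"

# Placeholder values (assumptions); substitute your own.
KEY_PAIR="my_key_pair"
IDENTITY_FILE="/opt/keys/my_key_pair.pem"
REGION="us-east-1"
VPC_ID="vpc-0123456789abcdef0"
AUTHORIZED_ADDRESS="203.0.113.10/32"
INSTANCE_TYPE="t2.micro"
ROLE_NAME="sparkour-app"
CLUSTER_NAME="sparkour-cluster"

# Hypothetical helper: launch one master and one worker.
launch_cluster() {
    "$SPARK_EC2" \
        --key-pair="$KEY_PAIR" \
        --identity-file="$IDENTITY_FILE" \
        --region="$REGION" \
        --vpc-id="$VPC_ID" \
        --authorized-address="$AUTHORIZED_ADDRESS" \
        --slaves=1 \
        --instance-type="$INSTANCE_TYPE" \
        --instance-profile-name="$ROLE_NAME" \
        launch "$CLUSTER_NAME"
}

echo "Call launch_cluster to start $CLUSTER_NAME in $REGION"
```

Setting SPARK_EC2=echo before calling launch_cluster prints the arguments instead of running the script, which is a cheap way to review them before any instances are created.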

It is useful to remember the 4 different roles a Spark server can play. In the following steps, we are running the script from our launch environment (which is probably also our development environment). The result of the script is a master and a worker, on two new EC2 instances.

- Run the script from your launch environment, replacing the example parameters above with your own values. You should see output like the following:

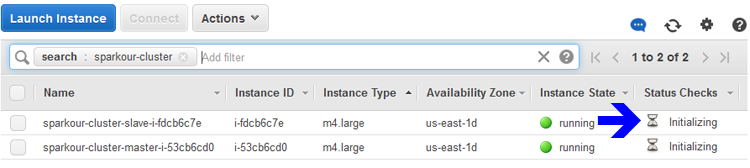

- It may take several minutes to pass this point, during which the script will periodically attempt to SSH into the instances using their Public DNS names. These attempts will fail until the cluster has entered the 'ssh-ready' state. You can monitor progress on the EC2 Dashboard: when the Status Checks succeed, the script should be able to SSH successfully. (It has taken my script as long as 16 minutes for SSH to connect, even after the Status Checks are succeeding, so patience is a requirement.)

- After this point, the script uses SSH to login to each of the cluster nodes, configure directories, and install Spark, Hadoop, Scala, RStudio and other dependencies. There will be several pages of log output, culminating in these lines:

If your script is unable to use SSH even after the EC2 instances have passed their Status Checks, you should troubleshoot the connection using the ssh command in your launch environment, replacing <sparkNodeAddress> with the hostname or IP address of one of the Spark nodes.
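A manual connection test could be sketched as follows. It assumes the instances accept the root login (the spark-ec2 default user, as far as I know) and that the identity file path matches what you pass to the script. The helper name check_ssh and the key path are illustrative.

```shell
#!/bin/sh
# Placeholder path (assumption); use the same file you pass to --identity-file.
IDENTITY_FILE="${IDENTITY_FILE:-/opt/keys/my_key_pair.pem}"

# Usage: check_ssh <sparkNodeAddress>
check_ssh() {
    # -v prints the connection handshake, which helps show where a failure occurs.
    # ConnectTimeout keeps a blocked port from hanging the command indefinitely.
    ssh -v -i "$IDENTITY_FILE" -o ConnectTimeout=10 "root@$1" 'echo ssh-ok'
}

echo "Call check_ssh <sparkNodeAddress> to test the connection"
```

Running it once against the node's IP address and once against its Public DNS Name helps separate Security Group problems from DNS problems.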

Here are some troubleshooting paths to consider:

- If you are running the spark-ec2 script from an EC2 instance, does its Security Group allow outbound traffic to the Spark cluster?

- Does the Spark cluster's generated Security Group allow inbound SSH traffic (port 22) from your launch environment?

- Can you manually SSH into a Spark cluster node from your launch environment over an IP address?

- Can you manually SSH into a Spark cluster node from your launch environment over a Public DNS Name? A failure here may suggest that your Spark cluster does not automatically get public addresses assigned (in which case you can use the --private-ips parameter), or your launch environment cannot locate the cluster over its DNS service.

Don't know about Spark version: <version>

This message appears when you specify a --spark-version parameter that is not in the list of values known to the script. The script is not always kept up to date, and may not know about the most recent releases of Spark. To fix this, edit the script, $SPARK_HOME/ec2/spark_ec2.py. Around line 57, find the array of VALID_SPARK_VERSIONS and add the version you wish to use. This value is used to build the URL to the GitHub repository containing the version of Spark to download and deploy.
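Because the exact line number can drift between releases of the script, searching for the name is more reliable than counting lines. A small sketch, assuming the script sits under $SPARK_HOME/ec2 (with /opt/spark as a placeholder fallback):

```shell
#!/bin/sh
SCRIPT="${SPARK_HOME:-/opt/spark}/ec2/spark_ec2.py"

if [ -f "$SCRIPT" ]; then
    # -n prefixes each match with its line number.
    grep -n "VALID_SPARK_VERSIONS" "$SCRIPT"
else
    echo "Not found: $SCRIPT" >&2
fi
```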

Validating the Cluster

- From a web browser, open the Master UI URL for the Spark cluster (shown above on port 8080). It should show a single worker in the ALIVE state. If you are having trouble hitting the URL, examine the master's Security Group in the EC2 dashboard and make sure that an inbound rule allows access from the web browser's location to port 8080.

- From the EC2 dashboard, select each instance in the cluster. In the details pane, confirm that the IAM Role was successfully assigned.

- Next, try to SSH into the master instance. You can do this from the launch environment where you ran the spark-ec2 script, or use a client like PuTTY that has been configured with the EC2 Key Pair. When logged into the master, you will find the Spark libraries installed under /root/.

- Finally, try running the Spark interactive shell on the master instance, connecting to the cluster. You can copy the Master URL from the Master UI and then start a shell with that value.

- When you refresh the Master UI, the interactive shell should appear as a running application. Your cluster is alive! You can quit or exit your interactive shell now.

There is no interactive shell available for Java. You should use one of the other languages to smoke test your Spark cluster.
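Starting the Scala shell against the cluster might look like the sketch below, run on the master instance. It assumes Spark was installed under /root/spark and that the Master URL uses the default port 7077; copy the real value from the Master UI. The helper name start_shell is illustrative.

```shell
#!/bin/sh
# Assumed install location on the master; pyspark lives in the same bin directory.
SPARK_SHELL="${SPARK_SHELL:-/root/spark/bin/spark-shell}"

# Placeholder (assumption); copy the real Master URL from the Master UI.
MASTER_URL="${MASTER_URL:-spark://<master-hostname>:7077}"

# Hypothetical helper: open an interactive shell connected to the cluster.
start_shell() {
    "$SPARK_SHELL" --master "$MASTER_URL"
}

echo "Call start_shell to connect to $MASTER_URL"
```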

Controlling the Cluster

- To start or stop the cluster, run these commands (from your launch environment, not the master):

- To permanently destroy your cluster, run this command (from your launch environment, not the master):

- Destroying a cluster does not delete the generated Security Groups by default. If you try to manually delete them, you will run into trouble because they reference each other. Remove all of the inbound rules from each Security Group and you can delete them successfully.
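The control commands above can be sketched as small wrappers around the script's start, stop, and destroy actions. All values are placeholders, the helper names are illustrative, and the script location is assumed to be $SPARK_HOME/ec2/spark-ec2.

```shell
#!/bin/sh
# Assumed script location; override SPARK_EC2 if yours differs.
SPARK_EC2="${SPARK_EC2:-${SPARK_HOME:-/opt/spark}/ec2/spark-ec2}"

# Placeholder values (assumptions); use the ones from your launch command.
KEY_PAIR="my_key_pair"
IDENTITY_FILE="/opt/keys/my_key_pair.pem"
REGION="us-east-1"
CLUSTER_NAME="sparkour-cluster"

# Starting needs the SSH credentials because the script reconfigures the nodes.
start_cluster() {
    "$SPARK_EC2" --key-pair="$KEY_PAIR" --identity-file="$IDENTITY_FILE" \
        --region="$REGION" start "$CLUSTER_NAME"
}

stop_cluster() {
    "$SPARK_EC2" --region="$REGION" stop "$CLUSTER_NAME"
}

# Permanently terminates the instances; this cannot be undone.
destroy_cluster() {
    "$SPARK_EC2" --region="$REGION" destroy "$CLUSTER_NAME"
}

echo "Defined start_cluster, stop_cluster, and destroy_cluster for $CLUSTER_NAME"
```

To my knowledge the script also accepts a --delete-groups option on destroy that removes the generated Security Groups for you; confirm with --help before relying on it.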

Remember that while you only incur costs for running EC2 instances, you are billed for all provisioned EBS Volumes. Charges stop when the Volumes are deleted, and this occurs by default when you terminate the EC2 instances for which they were created.

Reference Links

- Running Spark on EC2 in the Spark Programming Guide

- Amazon IAM User Guide

- Amazon EC2 User Guide

Change Log

- 2016-06-28: Updated with additional detail on required permissions and SSH troubleshooting (SPARKOUR-7).

- 2016-09-20: Updated for Spark 2.0.0. Code may not be backwards compatible with Spark 1.6.x (SPARKOUR-18).

- 2018-05-27: Added error information about unknown Spark versions.
