Tutorial #3: Managing Spark Clusters

Synopsis

This tutorial describes the tools available to manage Spark in a clustered configuration, including the official Spark scripts and the web User Interfaces (UIs). We compare the different cluster modes available, and experiment with Local and Standalone mode on our EC2 instance. We also learn how to connect the Spark interactive shells to different Spark clusters and conclude with a description of the spark-ec2 script.

Prerequisites

- You need a running EC2 instance with Spark installed, as described in Tutorial #2: Installing Spark on Amazon EC2. If you have stopped and started the instance since the previous tutorial, you need to make note of its new dynamic Public IP address.

Tutorial Goals

- You will be able to visualize a Spark cluster and understand the difference between Local and Standalone Mode.

- You will know how to manage masters and slaves from the command line, Master UI, or Worker UI.

- You will know how to run the interactive shells against a specific cluster.

- You will be ready to create a real Spark cluster with the spark-ec2 script.

Clustering Concepts

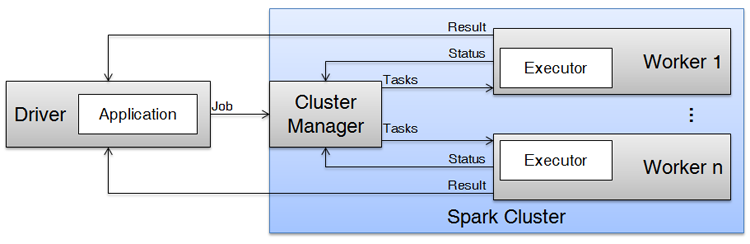

A key feature of Spark is its ability to process data in a parallel, distributed fashion. Breaking down a processing job into smaller tasks that can be executed in parallel by many workers is critical when dealing with massive volumes of data, but this introduces new complexity in the form of task scheduling, monitoring, and failure recovery. Spark abstracts this complexity away, leaving you free to focus on your data processing. Key clustering concepts are shown in the accompanying image and described below.

- A Spark cluster is a collection of servers running Spark that work together to run a data processing application.

- A Spark cluster has some number of worker servers (informally called the "slaves") that can perform tasks for the application. There's always at least 1 worker in a cluster, and more workers can be added for increased performance. When a worker receives the application code and a series of tasks to run, it creates executor processes to isolate the application's tasks from other applications that might be using the cluster.

- A Spark cluster has a cluster manager server (informally called the "master") that takes care of the task scheduling and monitoring on your behalf. It keeps track of the status and progress of every worker in the cluster.

- A driver containing your application submits it to the cluster as a job. The cluster manager partitions the job into tasks and assigns those tasks to workers. When all workers have finished their tasks, the application can complete.

Spark comes with a cluster manager implementation referred to as the Standalone cluster manager. However, resource management is not a unique Spark concept, and you can swap in one of these implementations instead:

- Apache Mesos is a general-purpose cluster manager with fairly broad industry adoption.

- Apache Hadoop YARN (Yet Another Resource Negotiator) is the resource manager for Hadoop 2, and may be a good choice in a Hadoop-centric ecosystem.

We focus on Standalone Mode here, and explore these alternatives in later recipes.

The Roles of a Spark Server

A server with Spark installed on it can be used in four separate ways:

- As a development environment, you use the server to write applications for Spark and then use the spark-submit script to submit your applications to a Spark cluster for execution.

- As a launch environment, you use the server to run the spark-ec2 script, which launches a new Spark cluster from scratch, starts and stops an existing cluster, or permanently terminates the cluster.

- As a master node in a cluster, this server is responsible for cluster management and receives jobs from a development environment.

- As a worker node in a cluster, this server is responsible for task execution.

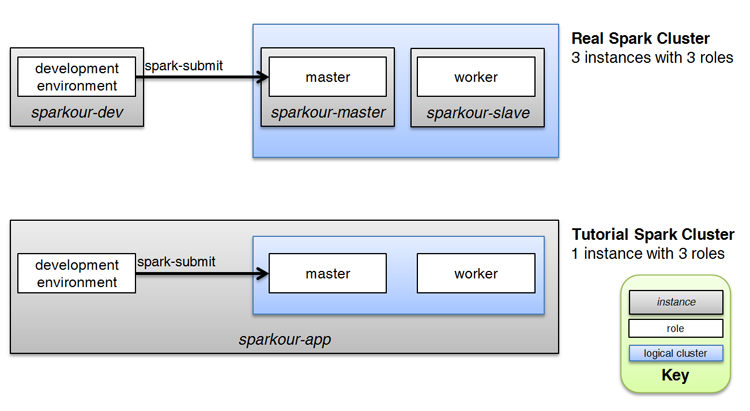

Sometimes, these roles overlap on the same server. For example, you probably use the same server as both a development environment and a launch environment. When you're doing rapid development with a test cluster, you might reuse the master node as a development environment to reduce the feedback loop between compiling and running your code. Later in this tutorial, we reuse the same server to create a cluster where a master and a worker are running on the same EC2 instance -- this demonstrates clustering concepts without forcing us to pay for an additional instance.

When you document or describe your architecture for others, you should be clear about the logical roles. For example, "Submitting my application from my local server A to the Spark cluster on server B" is much clearer than "Running my application on my Spark server" and guaranteed to elicit better responses when you inevitably pose a question on Stack Overflow.

Local and Standalone Mode

Local Mode

Juggling the added complexity of a Spark cluster may not be a priority when you're just getting started with Spark. If you are developing a new application and just want to execute code against small test datasets, you can use Spark's Local mode. In this mode, your development environment is the only thing you need. An ephemeral Spark engine is started when you run your application, executes your application just like a real cluster would, and then goes away when your application stops. There are no explicit masters or slaves to complicate your mental model.

By default, the Spark interactive shells run in Local mode unless you explicitly specify a cluster. A Spark engine starts up when you start the shell, executes the code that you type interactively, and goes away when you exit the shell. To mimic the idea of adding more workers to a Spark cluster, you can specify the number of parallel threads used in Local mode:
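As a minimal sketch of that option, the commands below start the Python shell in Local mode with four threads. The /opt/spark location matches the Spark home used elsewhere in this tutorial, and the thread count of 4 is an arbitrary value chosen for illustration.

```shell
# Assumption: Spark lives in /opt/spark unless SPARK_HOME says otherwise.
SPARK_HOME="${SPARK_HOME:-/opt/spark}"

# Illustrative thread count; local[*] would use one thread per CPU core.
THREADS=4
MASTER="local[${THREADS}]"

# Quote the master value so the shell never treats the brackets as a glob.
if [ -x "$SPARK_HOME/bin/pyspark" ]; then
    "$SPARK_HOME/bin/pyspark" --master "$MASTER"
else
    echo "pyspark not found under $SPARK_HOME/bin" >&2
fi
```

The same `--master` option should work with the other interactive shells, such as spark-shell for Scala.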

There is no interactive shell available for Java. You should use one of the other languages to smoke test your Spark cluster.

Standalone Mode

"Running in Standalone Mode" is just another way of saying that the Spark Standalone cluster manager is being used in the cluster. The Standalone cluster manager is a simple implementation that accepts jobs in First In, First Out (FIFO) order: applications that submit jobs first are given priority access to workers over applications that submit jobs later on. Starting a Spark cluster in Standalone Mode on our EC2 instance is easy to do with the official Spark scripts.

Normally, a Spark cluster would consist of separate servers for the cluster manager (master) and each worker (slave). Each server would have a copy of Spark installed, and a third server would be your development environment where you write and submit your application. In the interests of limiting the time you spend configuring EC2 instances and reducing the risk of unexpected charges, this tutorial creates a minimal cluster using the same EC2 instance as the development environment, master, and slave, as shown in the image below.

In a real world situation, you would launch as many worker EC2 instances as you need (and you would probably forgo these manual steps in favour of automation with the spark-ec2 script).

- Log in to your EC2 instance and start a Standalone master with the start-master.sh script.

- The output of this command identifies a log file location. Open this log file in your favourite text editor.

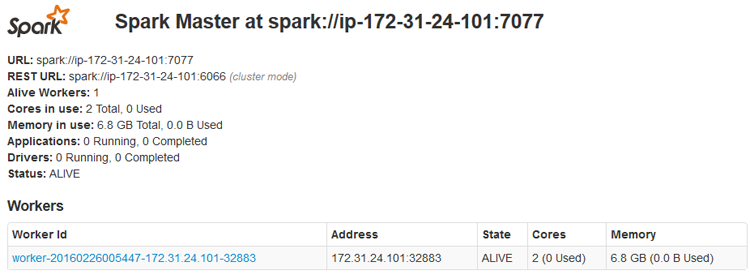

- On line 2 of the log output above, you can see the unique URL for this master, spark://ip-172-31-24-101:7077. This is the URL you specify when you want your application (or the interactive shells) to connect to this cluster. This is also the URL that slaves use to register themselves with the master. The hostname or IP address in this URL must be network-accessible from both your application and each slave.

- On line 5 of the log output above, you can see the URL for the Master UI, http://172.31.24.101:8080. This URL can be opened in a web browser to monitor tasks and workers in the cluster. Spark defaults to the private IP address of our EC2 instance. To access the URL from our local development environment, we need to replace the IP with the Public IP of the EC2 instance when we load it in a web browser.

- Copy the URLs into a text file so you can use them later. Then, exit your text editor and return to the command line.

- Next, we'll start up a single slave on the same EC2 instance. Remember that a real cluster would have a separate instance for each slave -- we are intentionally overloading this instance for training purposes. The start-slave.sh script requires the master URL from your log file.

- The output of this command identifies a log file location. Open this log file in your favourite text editor.

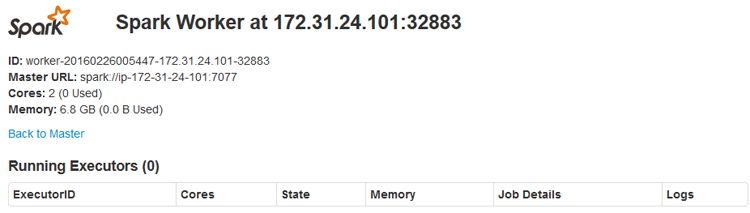

- On line 6 of the log output above, you can see the URL for the Worker UI, http://172.31.24.101:8081. This URL can be opened in a web browser to observe the worker. Just like before, Spark defaults to the private IP address of our EC2 instance. To access the URL from our local development environment, we need to replace the IP with the Public IP of the EC2 instance.

- Once you have copied this URL, exit your text editor and return to the command line.

- You now have a minimal Spark cluster (1 master and 1 slave) running on your EC2 instance, and three URLs copied into a scratch file for later.
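The steps above can be sketched as the following commands. This is an outline under stated assumptions rather than a definitive script: it assumes Spark is installed in /opt/spark and reuses the master URL from this tutorial's log output, which will differ on your instance.

```shell
# Assumption: Spark lives in /opt/spark unless SPARK_HOME says otherwise.
SPARK_HOME="${SPARK_HOME:-/opt/spark}"

# Master URL as it appears in this tutorial's master log; yours will differ.
MASTER_URL="spark://ip-172-31-24-101:7077"

if [ -x "$SPARK_HOME/sbin/start-master.sh" ]; then
    # Start the Standalone master; the script prints the log file location.
    "$SPARK_HOME/sbin/start-master.sh"

    # Start one worker on the same instance and register it with the master.
    "$SPARK_HOME/sbin/start-slave.sh" "$MASTER_URL"
else
    echo "Spark scripts not found under $SPARK_HOME/sbin" >&2
fi
```

In practice you would run the first command, read the real master URL out of the master log, and only then run the second command with that URL.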

There are several environment variables and properties that can be configured to control the behavior of the master and slaves. For example, we could explicitly set the hostnames used in the URLs to our EC2 instance's public IP, to guarantee that the URLs would be accessible from outside of the Amazon Virtual Private Cloud (VPC) containing our instance. Refer to the official Spark documentation on Spark Standalone Mode for a complete list.
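As one hedged illustration, the SPARK_PUBLIC_DNS environment variable controls the name that the master and workers advertise. The fragment below shows how it might be set in conf/spark-env.sh. The address is a placeholder from the reserved documentation range, not a real instance, and the master and workers would need a restart to pick up the change.

```shell
# Lines like these would go in conf/spark-env.sh under the Spark home
# (/opt/spark in this tutorial), which the start scripts read on launch.

# Placeholder address from the documentation range; use your instance's public IP.
PUBLIC_IP="203.0.113.10"

# SPARK_PUBLIC_DNS is the name that the master and workers advertise.
export SPARK_PUBLIC_DNS="$PUBLIC_IP"
```

Because a stopped and restarted EC2 instance receives a new dynamic Public IP address, a value set this way would need to be updated after each restart.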

The Master and Worker UI

Each member of the Spark cluster has a web-based UI that allows you to monitor running applications and executors in real time.

- From your local development environment, open two web browser windows or tabs and paste in the links to the Master and Worker UI you previously copied out of the log files. You should see the web pages shown in the images below.

- If you cannot access the links, make sure that you are using the public IP address of your EC2 instance, rather than the private IP displayed in the log files. Also make sure that the IP address of your local development environment has not changed since you set up the Security Group rules in the previous recipe. If your IP has changed, you need to update the Security Group rules to allow access from your new IP. You can update each Inbound rule from the EC2 dashboard in the AWS Management Console.

- Keep the UI pages open as you complete this recipe. Periodically refresh the pages and you should see updates to the status of various cluster components.
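If you prefer not to edit the URLs by hand, the swap from private to public IP can be sketched as below. The two UI URLs are the ones from this tutorial's log output. The public IP is a placeholder from the reserved documentation range, and `to_public` is a hypothetical helper name introduced only for this example.

```shell
# URLs as logged in this tutorial; they carry the instance's private IP.
MASTER_UI_URL="http://172.31.24.101:8080"
WORKER_UI_URL="http://172.31.24.101:8081"

PRIVATE_IP="172.31.24.101"
# Placeholder from the documentation address range; use your instance's public IP.
PUBLIC_IP="203.0.113.10"

# Hypothetical helper: replace the private IP in a URL with the public one.
to_public() {
    printf '%s\n' "$1" | sed "s/$PRIVATE_IP/$PUBLIC_IP/"
}

PUBLIC_MASTER_UI="$(to_public "$MASTER_UI_URL")"
PUBLIC_WORKER_UI="$(to_public "$WORKER_UI_URL")"

echo "$PUBLIC_MASTER_UI"   # prints http://203.0.113.10:8080
echo "$PUBLIC_WORKER_UI"   # prints http://203.0.113.10:8081
```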

Running the Interactive Shells with a Cluster

Now that you have a Spark cluster running, you can connect to it with the interactive shells. We are going to run our interactive shells from our EC2 instance, meaning that this instance is playing the roles of development environment, master, and slave at the same time.

- From the command line, run an interactive shell and specify the master URL of your minimal cluster.

- Refresh each UI web page. You should see a new application (the shell) in the Master UI, and a new executor in the Worker UI.

- Next, run our simple line count example in the shell.

- This time, the shell makes use of the Spark cluster to process the data. Your application is sent to the master for scheduling, and the master assigns the task to a worker for processing (here, we only have 1 worker). The time to execute is about the same as running in Local mode because our data size is so small, but improvements would be noticeable with massive amounts of data.

- As an experiment, open a second SSH window into your EC2 instance and stop the running worker. You need the worker's ID, which can be found in the Workers table of the Master UI. You can close this second window once the worker is stopped.

- Refreshing the Master UI after this command shows the Worker as DEAD. The Worker UI is also shut down and can no longer be refreshed. Additionally, trying to rerun the line count example in the Python shell now fails.

- After you submit the command, refresh the Master UI. The application enters a WAITING state and your shell eventually shows a warning.

- Because there are no workers to assign tasks to, your application can never complete. Hit Ctrl+C to abort your command in the interactive shell and then quit the shell.

- Refresh the Master UI one final time, and the shell moves from the Running Applications list to the Completed Applications list.

- To clean up our work, let's stop the master.
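The first and last steps of this walkthrough can be sketched as the commands below, assuming Spark is installed in /opt/spark and the master URL matches the one in this tutorial's log output. The line count shown in the comment is an assumption about what the earlier example looks like in the Python shell, including the README.md file name. The worker-stopping experiment is left out of this sketch.

```shell
# Assumption: Spark lives in /opt/spark unless SPARK_HOME says otherwise.
SPARK_HOME="${SPARK_HOME:-/opt/spark}"

# Master URL as logged in this tutorial; substitute the one from your own log.
MASTER_URL="spark://ip-172-31-24-101:7077"

if [ -x "$SPARK_HOME/bin/pyspark" ]; then
    # Open the Python shell against the cluster instead of Local mode.
    # Inside the shell, a line count could look like this (file name assumed):
    #   sc.textFile("README.md").count()
    "$SPARK_HOME/bin/pyspark" --master "$MASTER_URL"

    # After you quit the shell, stop the master to clean up.
    "$SPARK_HOME/sbin/stop-master.sh"
else
    echo "Spark not found under $SPARK_HOME" >&2
fi
```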

If you use the Scala shell from a version of Spark built with Scala 2.10, the command to quit is simply "exit".

Looking Ahead: The spark-ec2 Script

Our minimal cluster employed the following official Spark scripts:

- start-master.sh / stop-master.sh: Written to run from the master instance

- start-slave.sh / stop-slave.sh: Written to run from each slave instance

In our contrived example, it was straightforward to run all of the scripts from the same overloaded instance. In a real cluster, you would need to log in to each slave instance to start and stop that slave. As you can imagine, that would be quite tedious with many slaves, and there is a better way.

If you create a text file called conf/slaves in the master node's Spark home directory (/opt/spark here), and put the hostname of each slave instance in the file (1 slave per line), you can do all of your starting and stopping from the master instance with these scripts:

- start-slaves.sh / stop-slaves.sh: Start or stop the slaves listed in the slaves file.

- start-all.sh / stop-all.sh: Start or stop the slaves listed in the slaves file as well as the master.
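A minimal sketch of this approach follows, assuming Spark is installed in /opt/spark on the master instance. The two worker hostnames are hypothetical placeholders and do not refer to instances created in this tutorial.

```shell
# Assumption: Spark lives in /opt/spark unless SPARK_HOME says otherwise.
SPARK_HOME="${SPARK_HOME:-/opt/spark}"

# Hypothetical worker hostnames, one per line; substitute your own.
WORKER_HOSTS="ip-172-31-24-102
ip-172-31-24-103"

if [ -d "$SPARK_HOME/conf" ]; then
    # Write the worker list where the start and stop scripts look for it.
    printf '%s\n' "$WORKER_HOSTS" > "$SPARK_HOME/conf/slaves"

    # Start the master and every listed worker from the master instance.
    "$SPARK_HOME/sbin/start-all.sh"
else
    echo "No conf directory under $SPARK_HOME" >&2
fi
```

These scripts reach each worker over SSH, so the master instance typically needs key-based SSH access to every host listed in the file.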

Although managing the cluster from the master instance is better than logging into each worker, you still have the overhead of configuring and launching each instance. As you start to work with clusters of non-trivial size, you should take a look at the spark-ec2 script. This script automates all facets of cluster management, allowing you to configure, launch, start, stop, and terminate instances from the command line. It ensures that the masters and slaves running on the instances are cleanly started and stopped when the cluster is started or stopped. We explore the power of this script in the recipe, Managing a Spark Cluster with the spark-ec2 Script.

Conclusion

You have now seen the different ways that Spark can be deployed, and are almost ready to dive into doing functional, productive things with Spark. In the next tutorial, Tutorial #4: Writing and Submitting a Spark Application, we focus on the steps needed to create a Spark application and submit it to a cluster for execution. If you are done playing with Spark for now, make sure that you stop your EC2 instance so you don't incur unexpected charges.

Reference Links

- Spark Standalone Mode in the Spark Programming Guide

- Running the Spark Interactive Shells in the Spark Programming Guide

Change Log

- 2016-09-20: Updated for Spark 2.0.0. Code may not be backwards compatible with Spark 1.6.x (SPARKOUR-18).
