Tutorial #4: Writing and Submitting a Spark Application

Synopsis

This tutorial takes you through the common steps involved in creating a Spark application and submitting it to a Spark cluster for execution. We write and submit a simple application and then review the examples bundled with Apache Spark.

Prerequisites

- You need a development environment with Apache Spark installed, as described in Tutorial #2: Installing Spark on Amazon EC2. You can opt to use the EC2 instance we created in that tutorial. If you have stopped and started the instance since the previous tutorial, you need to make note of its new dynamic Public IP address.

Tutorial Goals

- You will understand the steps required to write Spark applications in a language of your choosing.

- You will understand how to bundle your application with all of its dependencies for submission.

- You will be able to submit applications to a Spark cluster (or Local mode) with the spark-submit script.

Installing a Programming Language

Spark imposes no special restrictions on where you can do your development. The Sparkour recipes will continue to use the EC2 instance created in a previous tutorial as a development environment, so that each recipe can start from the same baseline configuration. However, you probably already have a development environment tuned just the way you like it, so you can use it instead if you prefer. You'll just need to get your build dependencies in order.

- Regardless of which language you use, you'll need Apache Spark and a Java Runtime Environment (8 or higher) installed. These components allow you to submit your application to a Spark cluster (or run it in Local mode).

- You also need the development kit for your language. For Spark 2.x, this means at least Java Development Kit (JDK) 8, Python 3.0, R 3.1, or Scala 2.11. You probably already have the development kit for your language installed in your development environment.

- Finally, you need to link or include the core Spark libraries with your application. If you are using an Integrated Development Environment (IDE) like Eclipse or IntelliJ, the official Spark documentation provides instructions for adding Spark dependencies in Maven. If you don't use Maven, you can manually track down the dependencies in your installed Spark directory:

- Java and Scala dependencies can be found in jars (or lib for Spark 1.6).

- Python dependencies can be found in python/pyspark.

- R dependencies can be found in R/lib.

Instructions for installing each programming language on your EC2 instance are shown below. If your personal development environment (outside of EC2) does not already have the right components installed, you should be able to review these instructions and adapt them to your unique environment.

If you intend to write any Spark applications with Java, you should use Java 8 or higher. This version of Java introduced Lambda Expressions, which reduce the pain of writing repetitive boilerplate code while making the resulting code more similar to Python or Scala code. Sparkour Java examples employ Lambda Expressions heavily, and Spark dropped Java 7 support in version 2.2. The Amazon Linux AMI comes with Java 7, but it's easy to switch versions.

Because the Amazon Linux image requires Python 2 as a core system dependency, you should install Python 3 in parallel without removing Python 2.

To complete your installation, set the PYSPARK_PYTHON environment variable so it takes effect when you log in to the EC2 instance. This variable determines which version of Python is used by Spark.

You need to reload the environment variables (or log out and log in again) so they take effect.

The R environment is available in the Amazon Linux package repository.

Scala is not in the Amazon Linux package repository, and must be downloaded separately. You should use the same version of Scala that was used to build your copy of Apache Spark.

- Pre-built distributions of Spark 1.x use Scala 2.10.

- Pre-built distributions of Spark 2.4.1 and earlier use Scala 2.11.

- Pre-built distributions of Spark 2.4.2 use Scala 2.12.

- Pre-built distributions of Spark 2.4.3 and later 2.4.x releases use Scala 2.11.

To complete your installation, set the SCALA_HOME environment variable so it takes effect when you log in to the EC2 instance.

You need to reload the environment variables (or log out and log in again) so they take effect.

These instructions do not cover Java and Scala build tools (such as Maven and SBT), which simplify the compiling and bundling steps of the development lifecycle. Build tools are covered in Building Spark Applications with Maven and Building Spark Applications with SBT.

Writing a Spark Application

- Download and unzip the example source code for this tutorial. This ZIP archive contains source code in all supported languages. Here's how you would do this on our EC2 instance:

- The example source code for each language is in a subdirectory of src/main with that language's name. Locate the source code for your programming language and open the file in a text editor.

- The application is decidedly uninteresting: it merely initializes a SparkSession, prints a message, and stops the session (which also stops the underlying SparkContext). We are more concerned with getting the application executed right now, and will create more compelling programs in the next tutorial.

Bundling Dependencies

When you submit an application to a Spark cluster, the cluster manager distributes the application code to each worker so it can be executed locally. This means that all dependencies need to be included (except for Spark and Hadoop dependencies, which the workers already have copies of). There are multiple approaches for bundling dependencies, depending on your programming language:

- Java and Scala: The Spark documentation recommends creating an assembly JAR (or "uber" JAR) containing your application and all of its dependencies. You can also use the --packages parameter with a comma-separated list of Maven coordinates, and Spark will download and distribute the libraries from a Maven repository. Finally, you can specify a comma-separated list of third-party JAR files with the --jars parameter in the spark-submit script. Avoiding the assembly JAR is simpler, but has a performance trade-off if many separate files are copied across the workers.

- Python: You can use the --py-files parameter in the spark-submit script and pass in .zip, .egg, or .py dependencies.

- R: You can load additional libraries when you initialize the SparkR package in your R script.

In the case of the --py-files or --jars parameters, you can also use URL schemes such as ftp: or hdfs: to reference files stored elsewhere, or local: to specify files that are already stored on the workers. See the documentation on Advanced Dependency Management for more details.
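To make the dependency parameters concrete, here is a minimal Python sketch that assembles them into a spark-submit argument list. The --packages, --jars, and --py-files flags come from spark-submit itself, but the helper name dependency_flags, the file names, and the Maven coordinate are hypothetical and exist only for illustration.

```python
import shlex


def dependency_flags(packages=(), jars=(), py_files=()):
    """Build the spark-submit flags for the dependency options described above."""
    flags = []
    if packages:
        # Maven coordinates take the form groupId:artifactId:version.
        flags += ["--packages", ",".join(packages)]
    if jars:
        # Entries may be plain paths or URLs such as hdfs: or local:.
        flags += ["--jars", ",".join(jars)]
    if py_files:
        flags += ["--py-files", ",".join(py_files)]
    return flags


# Hypothetical Python application with two bundled dependencies.
python_flags = dependency_flags(py_files=["deps.zip", "hdfs:///libs/helpers.py"])
print(shlex.join(["spark-submit", *python_flags, "my_app.py"]))

# Hypothetical JAR application that pulls one library from a Maven repository
# and reuses a JAR that is already present on every worker.
jar_flags = dependency_flags(
    packages=["com.example:my-lib:1.0.0"],
    jars=["local:/opt/libs/shared.jar"],
)
print(shlex.join(["spark-submit", *jar_flags, "--class", "com.example.MyApp", "my-app.jar"]))
```

Each parameter takes a single comma-separated value, which is why the helper joins its inputs rather than repeating the flag.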

The simple application in this tutorial has no dependencies besides Spark itself. We cover the creation of an assembly JAR in Building Spark Applications with Maven and Building Spark Applications with SBT.

Submitting the Application

Here are examples of how you would submit an application in each of the supported languages. For JAR-based languages (Java and Scala), we would pass in an application JAR file and a class name. For interpreted languages, we just need the top-level module or script name. Everything after the expected spark-submit arguments is passed into the application itself.
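The argument ordering described above can be sketched in Python as a small command builder. This is an illustration under stated assumptions rather than the tutorial's own commands: build_submit_command is a hypothetical helper, and the application files, class name, and arguments are made up. Only the --master and --class flags are taken from spark-submit.

```python
import shlex


def build_submit_command(app, main_class=None, master="local[*]", app_args=()):
    """Assemble a spark-submit command line as a list of arguments."""
    command = ["spark-submit", "--master", master]
    if main_class is not None:
        # JAR-based languages (Java, Scala) also name the class to run.
        command += ["--class", main_class]
    # The application JAR or script comes after all spark-submit options.
    command.append(app)
    # Anything after the application file is handed to the application itself.
    command += list(app_args)
    return command


# Hypothetical applications, one per style of language.
java_cmd = build_submit_command(
    "my-app.jar", main_class="com.example.MyApp", app_args=["input.txt"]
)
python_cmd = build_submit_command("my_app.py")
r_cmd = build_submit_command("my_app.R")

for cmd in (java_cmd, python_cmd, r_cmd):
    # shlex.join quotes values such as local[*] so they are safe to paste into a shell.
    print(shlex.join(cmd))
```

On a machine with Spark installed, a list like java_cmd could be handed to subprocess.run(java_cmd, check=True) to launch the job.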

- It's time to submit our simple application. It has no dependencies or application arguments, and we'll run it in Local mode, which means that a brand new Spark engine spins up to execute the code, rather than relying upon an existing Spark cluster.

- The complete set of low-level commands required to build and submit are explicitly shown here for your awareness. In tutorials and recipes that follow, these commands are simplified through a helpful build script.

- Submitting your application should result in a brief statement about Spark.

Deploy Modes

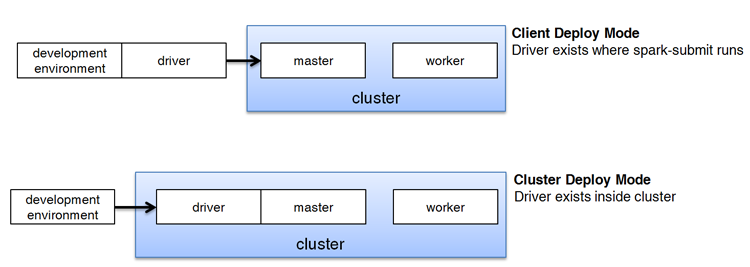

The spark-submit script accepts a --deploy-mode parameter which dictates how the driver is set up. Recall from the previous recipe that the driver contains your application and relies upon the completed tasks of workers to successfully execute your code. It's best if the driver is physically co-located near the workers to reduce any network latency.

- If the location where you are running spark-submit is sufficiently close to the cluster already, the client deploy mode simply places the driver on the same instance where the script was run. This is the default approach.

- If the spark-submit location is very far from the cluster (e.g., your cluster is in another AWS region), you can reduce network latency by placing the driver on a node within the cluster with the cluster deploy mode.

The image at the start of this section visualizes these modes.
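As a rough sketch of how the choice between the two modes might be expressed, the Python below adds the --deploy-mode flag to an existing argument list. The helpers with_deploy_mode and choose_deploy_mode, the master URL, and the application names are hypothetical. The only values taken from spark-submit are the flag itself and its two settings, client and cluster.

```python
def choose_deploy_mode(submitter_near_cluster):
    """Pick a deploy mode from the rule of thumb above."""
    # Near the cluster: keep the driver where spark-submit runs (the default).
    # Far from the cluster: move the driver onto a node inside the cluster.
    return "client" if submitter_near_cluster else "cluster"


def with_deploy_mode(command, deploy_mode="client"):
    """Return a copy of a spark-submit argument list with --deploy-mode set."""
    if deploy_mode not in ("client", "cluster"):
        raise ValueError(f"unknown deploy mode: {deploy_mode!r}")
    # Options must precede the application file, so insert right after the script name.
    return [command[0], "--deploy-mode", deploy_mode, *command[1:]]


# Hypothetical submission to a cluster in another region.
base = [
    "spark-submit",
    "--master", "spark://master.example.com:7077",
    "--class", "com.example.MyApp",
    "my-app.jar",
]
remote = with_deploy_mode(base, choose_deploy_mode(submitter_near_cluster=False))
print(" ".join(remote))
```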

Configuring spark-submit

If you find yourself often reusing the same configuration parameters, you can create a conf/spark-defaults.conf file in the Spark home directory of your development environment. Properties explicitly set within a Spark application (on the SparkConf object) have the highest priority, followed by properties passed into the spark-submit script, and finally the defaults file.

Here is an example of setting the master URL in a defaults file. More detail on the available properties can be found in the official documentation.
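The precedence rules can be illustrated with a short Python sketch. It parses illustrative defaults-file content (one property per line, with the key and value separated by whitespace) and then layers the three sources in priority order. The sample property values, the master URL, and the helper names parse_defaults and resolve_properties are assumptions made for this example, and the parser is deliberately simpler than Spark's own.

```python
# Illustrative content for conf/spark-defaults.conf.
DEFAULTS_TEXT = """\
# Default properties picked up by spark-submit
spark.master            local[*]
spark.executor.memory   2g
"""


def parse_defaults(text):
    """Parse whitespace-separated key/value lines, skipping blanks and comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        parts = line.split(None, 1)
        if len(parts) == 2:
            props[parts[0]] = parts[1].strip()
    return props


def resolve_properties(defaults, submit_flags, spark_conf):
    """Merge the three sources; later dictionaries override earlier ones."""
    # Lowest priority first: defaults file, then spark-submit flags, then SparkConf.
    return {**defaults, **submit_flags, **spark_conf}


defaults = parse_defaults(DEFAULTS_TEXT)
# Hypothetical values passed on the command line and set in application code.
submit_flags = {"spark.master": "spark://master.example.com:7077"}
spark_conf = {"spark.executor.memory": "4g"}

effective = resolve_properties(defaults, submit_flags, spark_conf)
for key in sorted(effective):
    print(key, effective[key])
```

Here the master URL from the command line replaces the one in the defaults file, and the memory setting made in application code wins over both.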

Spark Distribution Examples

Now that we have successfully submitted and executed an application, let's take a look at one of the examples included in the Spark distribution.

- The source code for the JavaWordCount application can be found in the org.apache.spark.examples package. You can view the source code, but be aware that this is just a reference copy. Any changes you make to it will not affect the already compiled application JAR.

- To submit this application in Local mode, you use the spark-submit script, just as we did with our simple application.

- Spark also includes a quality-of-life script that makes running Java and Scala examples simpler. Under the hood, this script ultimately calls spark-submit.

- Source code for the Python examples can be found in $SPARK_HOME/examples/src/main/python/. These examples have no dependent packages, other than the basic pyspark library.

- Source code for the R examples can be found in $SPARK_HOME/examples/src/main/r/. These examples have no dependent packages, other than the basic SparkR library.

- Source code for the Scala examples can be found in $SPARK_HOME/examples/src/main/scala/. These examples follow the same patterns and directory organization as the Java examples.
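The two ways of running a bundled example can be sketched in Python as follows. This assumes the Spark 2.x layout, in which the compiled examples live in a JAR matching examples/jars/spark-examples_*.jar under the Spark home directory. That pattern, the helper names, and the README.md input are assumptions for illustration, so adjust them to match your installation.

```python
import glob
import os


def find_examples_jar(spark_home):
    """Locate the compiled examples JAR (assumes the Spark 2.x directory layout)."""
    pattern = os.path.join(spark_home, "examples", "jars", "spark-examples_*.jar")
    matches = sorted(glob.glob(pattern))
    if not matches:
        raise FileNotFoundError(f"no examples JAR matches {pattern}")
    return matches[0]


def word_count_command(spark_home, input_path):
    """Build a spark-submit command that runs JavaWordCount in Local mode."""
    return [
        os.path.join(spark_home, "bin", "spark-submit"),
        "--class", "org.apache.spark.examples.JavaWordCount",
        "--master", "local",
        find_examples_jar(spark_home),
        input_path,  # passed through to the application as its argument
    ]


def run_example_command(spark_home, example, *args):
    """Build the equivalent command using the run-example convenience script."""
    return [os.path.join(spark_home, "bin", "run-example"), example, *args]


# The convenience script needs only the example name and its arguments.
print(" ".join(run_example_command("/opt/spark", "JavaWordCount", "README.md")))
```

The /opt/spark path above is a placeholder. In practice you would pass the value of the SPARK_HOME environment variable.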

Conclusion

You have now seen the lifecycle of a Spark application, from writing it to submitting it. It's time to stop poking around the edges of Spark and actually use the Spark APIs to do something useful with data. In the next tutorial, Tutorial #5: Working with Spark Resilient Distributed Datasets (RDDs), we dive into the Spark Core API and work with some common transformations and actions. If you are done playing with Spark for now, make sure that you stop your EC2 instance so you don't incur unexpected charges.

Reference Links

- Linking with Spark in the Spark Programming Guide

- Self-Contained Applications (in Java, Python, and Scala) in the Spark Programming Guide

- Submitting Applications in the Spark Programming Guide

- Loading Default Configurations in the Spark Programming Guide

- Building Spark Applications with Maven

- Building Spark Applications with SBT

Change Log

- 2016-03-15: Updated with instructions for all supported languages instead of Python alone (SPARKOUR-4).

- 2016-09-20: Updated for Spark 2.0.0. Code may not be backwards compatible with Spark 1.6.x (SPARKOUR-18).

- 2019-01-22: Updated for Python 3.x (SPARKOUR-33).
