2019-01-06 Happy New Year! All recipes have been updated and tested against Spark 2.4.0.

I have also incorporated some behind-the-scenes automation to streamline regression testing and make it easier for me to stay in sync with future Spark releases.

2018-05-27 All recipes have been updated and tested against Spark 2.3.0 and Scala 2.11.12.

2017-08-05 All recipes have been updated and tested against Spark 2.2.0.

2017-05-29 All recipes have been updated and tested against Spark 2.1.1 and Scala 2.11.11.

2016-10-09 All recipes have been updated and tested against Spark 2.0.1.

2016-09-24 "Understanding the SparkSession in Spark 2.0" introduces the new SparkSession class from Spark 2.0, which provides a unified entry point that consolidates the various Context classes previously found in Spark 1.x (such as SQLContext and HiveContext).

2016-09-22 "Installing and Configuring Apache Zeppelin" explains how to install Apache Zeppelin and configure it to work with Spark. Interactive notebooks such as Zeppelin make it easier for analysts (who may not be software developers) to harness the power of Spark through iterative exploration and built-in visualizations.

2016-09-21

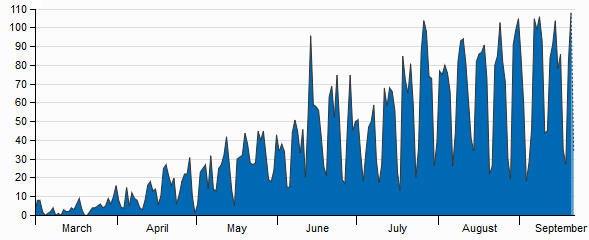

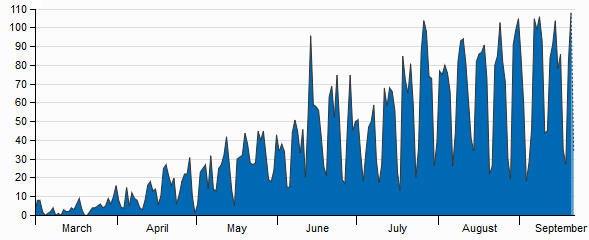

Website statistics prove that developers love Sparkour (except on the weekends). Thanks for your continued support!

2016-09-20 All recipes have been updated to use the new features available in Spark 2.0.0, such as the SparkSession and the new Accumulator API. I will focus exclusively on Spark 2.x in future recipes, as that is the release most in need of solid documentation.

2016-06-28 All recipes have been updated and tested against Spark 1.6.2.

2016-05-07 "Aggregating Results with Spark Accumulators" explains how to use accumulators to aggregate results in a Spark application. Accumulators provide a safe way for multiple Spark workers to contribute information to a shared variable, which can then be read by the application driver.

2016-05-04 "Controlling the Schema of a Spark DataFrame" demonstrates different strategies for defining the schema of a DataFrame built from various data sources (using RDDs and JSON as examples). Schemas can be inferred from metadata or from the data itself, or specified programmatically in advance in your application.

2016-04-14 "Improving Spark Performance with Broadcast Variables" explains how to use broadcast variables to distribute immutable reference data across a Spark cluster. Using broadcast variables can improve performance by reducing the amount of network traffic and data serialization required to execute your Spark application.

2016-04-13 "Configuring an S3 VPC Endpoint for a Spark Cluster" shows how to set up a VPC Endpoint for Amazon S3, which allows your Spark cluster to interact with S3 resources from a private subnet without a Network Address Translation (NAT) instance or Internet Gateway.

2016-04-07 All recipes have been updated and tested against Spark 1.6.1.

2016-03-29 "Building Spark Applications with Maven" covers the use of Apache Maven to build and bundle Spark applications written in Java or Scala. It focuses very narrowly on a subset of commands relevant to Spark applications, including managing library dependencies, packaging, and creating an assembly JAR file.

2016-03-24 "Using JDBC with Spark DataFrames" shows how Spark DataFrames can be read from or written to relational database tables with Java Database Connectivity (JDBC).

2016-03-17 "Building Spark Applications with SBT" covers the use of SBT (Simple Build Tool or, sometimes, Scala Build Tool) to build and bundle Spark applications written in Java or Scala. It focuses very narrowly on a subset of commands relevant to Spark applications, including managing library dependencies, packaging, and creating an assembly JAR file with the sbt-assembly plugin.

2016-03-11 "Working with Spark DataFrames" provides a straightforward introduction to the Spark DataFrames API. It uses common DataFrames operators to explore and transform raw data from the 2016 Democratic Primary in Virginia.

2016-03-06 "Configuring Spark to Use Amazon S3" provides the steps needed to securely connect an Apache Spark cluster running on Amazon EC2 to data stored in Amazon S3. It contains instructions for both the classic s3n protocol and the newer, but still maturing, s3a protocol.

2016-03-05 "Configuring Amazon S3 as a Spark Data Source" provides the steps needed to securely expose data in Amazon S3 for consumption by a Spark application. The resultant configuration works with both supported S3 protocols in Spark: the classic s3n protocol and the newer, but still maturing, s3a protocol.

2016-03-04 "Managing a Spark Cluster with the spark-ec2 Script" describes how to automatically launch, start, stop, or destroy a Spark cluster running in Amazon EC2. It steps through the pre-launch configuration, explains the script's most common parameters, and points out where specific parameters can be found in the AWS Management Console.

2016-03-01 Welcome to Sparkour! To kick things off, I have released five sequential tutorials, which should give you a solid foundation for mastering Spark. My long-term goal is to publish a few new recipes each month until the end of time. Help me improve Sparkour by reporting bugs and suggesting improvements on the Issues page.

2016-02-15 The idea for Sparkour was conceived during the President's Day ice storm.

Brian Uri! has over 20 years of experience in software engineering, proposal writing, and government data standards, with relevant certifications in Amazon Web Services and Apache Hadoop.